verde.BlockKFold¶

-

class

verde.BlockKFold(spacing=None, shape=None, n_splits=5, shuffle=False, random_state=None, balance=True)[source]¶ K-Folds over spatial blocks cross-validator.

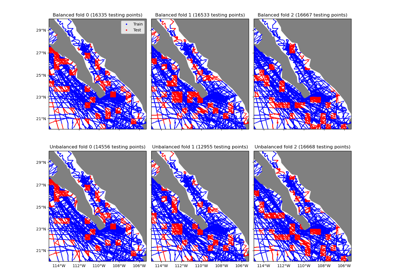

Yields indices to split data into training and test sets. Data are first grouped into rectangular blocks of size given by the spacing argument. Alternatively, blocks can be defined by the number of blocks in each dimension using the shape argument instead of spacing. The blocks are then split into testing and training sets iteratively along k folds of the data (k is given by n_splits).

By default, the blocks are split into folds in a way that makes each fold have approximately the same number of data points. Sometimes this might not be possible, which can happen if the number of splits is close to the number of blocks. In these cases, each fold will have the same number of blocks, regardless of how many data points are in each block. This behaviour can also be disabled by setting

balance=False.Shuffling the blocks prior to splitting is strongly encouraged. Not shuffling will essentially lead to the creation of n_splits large blocks since blocks are spatially adjacent when not shuffled. The default behaviour is not to shuffle for compatibility with similar cross-validators in scikit-learn.

This cross-validator is preferred over

sklearn.model_selection.KFoldfor spatial data to avoid overestimating cross-validation scores. This can happen because of the inherent autocorrelation that is usually associated with this type of data (points that are close together are more likely to have similar values). See [Roberts_etal2017] for an overview of this topic.- Parameters

spacing (float, tuple = (s_north, s_east), or None) – The block size in the South-North and West-East directions, respectively. A single value means that the spacing is equal in both directions. If None, then shape must be provided.

shape (tuple = (n_north, n_east) or None) – The number of blocks in the South-North and West-East directions, respectively. If None, then spacing must be provided.

n_splits (int) – Number of folds. Must be at least 2.

shuffle (bool) – Whether to shuffle the data before splitting into batches.

random_state (int, RandomState instance or None, optional (default=None)) – If int, random_state is the seed used by the random number generator; If RandomState instance, random_state is the random number generator; If None, the random number generator is the RandomState instance used by np.random.

balance (bool) – Whether or not to split blocks into fold with approximately equal number of data points. If False, each fold will have the same number of blocks (which can have different number of data points in them).

See also

train_test_splitSplit a dataset into a training and a testing set.

cross_val_scoreScore an estimator/gridder using cross-validation.

Examples

>>> from verde import grid_coordinates >>> import numpy as np >>> # Make a regular grid of data points >>> coords = grid_coordinates(region=(0, 3, -10, -7), spacing=1) >>> # Need to convert the coordinates into a feature matrix >>> X = np.transpose([i.ravel() for i in coords]) >>> kfold = BlockKFold(spacing=1.5, n_splits=4) >>> # These are the 1D indices of the points belonging to each set >>> for train, test in kfold.split(X): ... print("Train: {} Test: {}".format(train, test)) Train: [ 2 3 6 7 8 9 10 11 12 13 14 15] Test: [0 1 4 5] Train: [ 0 1 4 5 8 9 10 11 12 13 14 15] Test: [2 3 6 7] Train: [ 0 1 2 3 4 5 6 7 10 11 14 15] Test: [ 8 9 12 13] Train: [ 0 1 2 3 4 5 6 7 8 9 12 13] Test: [10 11 14 15] >>> # A better way to visualize this is to create a 2D array and put >>> # "train" or "test" in the corresponding locations. >>> shape = coords[0].shape >>> mask = np.full(shape=shape, fill_value=" ") >>> for iteration, (train, test) in enumerate(kfold.split(X)): ... # The index needs to be converted to 2D so we can index our matrix. ... mask[np.unravel_index(train, shape)] = "train" ... mask[np.unravel_index(test, shape)] = " test" ... print("Iteration {}:".format(iteration)) ... print(mask) Iteration 0: [[' test' ' test' 'train' 'train'] [' test' ' test' 'train' 'train'] ['train' 'train' 'train' 'train'] ['train' 'train' 'train' 'train']] Iteration 1: [['train' 'train' ' test' ' test'] ['train' 'train' ' test' ' test'] ['train' 'train' 'train' 'train'] ['train' 'train' 'train' 'train']] Iteration 2: [['train' 'train' 'train' 'train'] ['train' 'train' 'train' 'train'] [' test' ' test' 'train' 'train'] [' test' ' test' 'train' 'train']] Iteration 3: [['train' 'train' 'train' 'train'] ['train' 'train' 'train' 'train'] ['train' 'train' ' test' ' test'] ['train' 'train' ' test' ' test']]

For spatial data, it’s often good to shuffle the blocks before assigning them to folds:

>>> # Set the random_state to make sure we always get the same result. >>> kfold = BlockKFold( ... spacing=1.5, n_splits=4, shuffle=True, random_state=123, ... ) >>> for train, test in kfold.split(X): ... print("Train: {} Test: {}".format(train, test)) Train: [ 0 1 2 3 4 5 6 7 8 9 12 13] Test: [10 11 14 15] Train: [ 2 3 6 7 8 9 10 11 12 13 14 15] Test: [0 1 4 5] Train: [ 0 1 4 5 8 9 10 11 12 13 14 15] Test: [2 3 6 7] Train: [ 0 1 2 3 4 5 6 7 10 11 14 15] Test: [ 8 9 12 13]

These should be the same splits as we got before but in a different order. This only happens because in this example we have the number of splits equal to the number of blocks (4). With real data the effects would be more dramatic.

Methods Summary

|

Returns the number of splitting iterations in the cross-validator |

|

Generate indices to split data into training and test set. |

-

BlockKFold.get_n_splits(X=None, y=None, groups=None)¶ Returns the number of splitting iterations in the cross-validator

-

BlockKFold.split(X, y=None, groups=None)¶ Generate indices to split data into training and test set.

- Parameters

X (array-like, shape (n_samples, 2)) – Columns should be the easting and northing coordinates of data points, respectively.

y (array-like, shape (n_samples,)) – The target variable for supervised learning problems. Always ignored.

groups (array-like, with shape (n_samples,), optional) – Group labels for the samples used while splitting the dataset into train/test set. Always ignored.

- Yields

train (ndarray) – The training set indices for that split.

test (ndarray) – The testing set indices for that split.